Cadence, Imec Disclose 3-nm Effort

SAN JOSE, Calif. — Cadence Design Systems and the Imec research institute disclosed that they are working toward a 3-nm tapeout of an unnamed 64-bit processor. The effort aims to produce a working chip later this year using a combination of extreme ultraviolet (EUV) and immersion lithography.

So far, Cadence and Imec have created and validated GDS files using a modified Cadence tool flow. It is based on a metal stack using a 21-nm routing pitch and a 42-nm contacted poly pitch created with data from a metal layer made in an earlier experiment.

Imec is starting work on the masks and lithography, initially aiming to use double-patterning EUV and self-aligned quadruple patterning (SAQP) immersion processes. Over time, Imec hopes to optimize the process to use a single pass in the EUV scanner. Ultimately, fabs may migrate to a planned high-numerical-aperture version of today’s EUV systems to make 3-nm chips.

The 3-nm node is expected to be in production as early as 2023. TSMC announced in October plans for a 3-nm fab in Taiwan, later adding that it could be built by 2022. Cadence and Imec have been collaborating on research in the area for two years as an extension of past efforts on 5-nm devices.

“We made improvements in our digital implementation flow to address the finer routing geometry … there definitely will be some new design rules at 3 nm,” said Rod Metcalfe, a product management group director at Cadence, declining to provide specifics. “We needed to get some early visibility so when our customers do 3 nm in a few years, EDA tools will be well-defined.”

Besides the finer features, the first two layers of 3-nm chips may use different metalization techniques and metals such as cobalt, said Ryoung-han Kim, an R&D group manager at Imec. The node is also expected to use new transistor designs such as nanowires or nanosheets rather than the FinFETs used in today’s 16-nm and finer processes.

“Our work on the test chip has enabled interconnect variation to be measured and improved and the 3-nm manufacturing process to be validated,” said An Steegen, executive vice president for semiconductor technology and systems at Imec, in a press statement.

The research uses the Cadence Innovus Implementation System and Genus Synthesis tools. Imec is using a custom 3-nm cell library and a TRIM metal flow. The announcement of their collaboration comes one day after Imec detailed findings of random defects impacting 5-nm designs.

Online messageinquiry

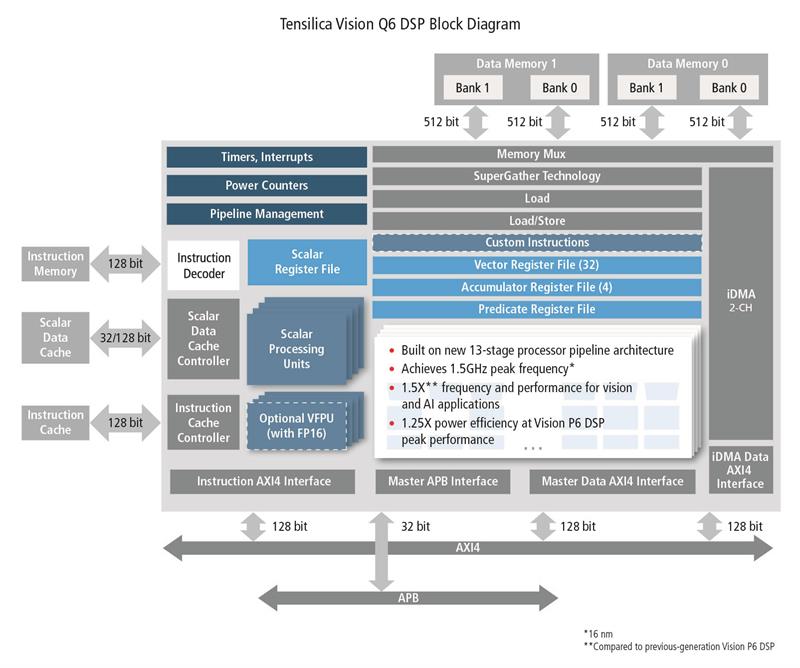

Cadence unveils Tensilica Vision Q6 DSP IP

- Week of hot material

- Material in short supply seckilling

| model | brand | Quote |

|---|---|---|

| MC33074DR2G | onsemi | |

| TL431ACLPR | Texas Instruments | |

| RB751G-40T2R | ROHM Semiconductor | |

| CDZVT2R20B | ROHM Semiconductor | |

| BD71847AMWV-E2 | ROHM Semiconductor |

| model | brand | To snap up |

|---|---|---|

| BU33JA2MNVX-CTL | ROHM Semiconductor | |

| BP3621 | ROHM Semiconductor | |

| TPS63050YFFR | Texas Instruments | |

| ESR03EZPJ151 | ROHM Semiconductor | |

| STM32F429IGT6 | STMicroelectronics | |

| IPZ40N04S5L4R8ATMA1 | Infineon Technologies |

- Week of ranking

- Month ranking

Qr code of ameya360 official account

Identify TWO-DIMENSIONAL code, you can pay attention to

Please enter the verification code in the image below: