Ameya360: STMicro Adds TinyML Developer Cloud

STMicroelectronics has put its STM32Cube.AI machine learning development environment into the cloud, alongside the existing offline version and complete with cloud-accessible ST MCU boards for testing.

Both versions generate optimized C code for STM32 microcontrollers from TensorFlow, PyTorch or ONNX files. The developer cloud version uses the same core tools as the downloadable version, but adds an interface to ST’s GitHub model zoo and the ability to run models remotely on cloud-connected ST boards in order to test performance on different hardware.

“[We want] to address a new category of users: the AI community, especially data scientists and AI developers that are used to developing on online services and platforms,” Vincent Richard, AI product marketing manager at STMicroelectronics, told EE Times. “That’s our aim with the developer cloud…there is no download for the user, they go straight to the interface and start developing and testing.”

ST does not expect users to migrate from the offline version to the cloud version, since the downloadable/installable version of STM32Cube.AI is heavily adapted for embedded developers who are already using ST’s development environment for other tasks, such as defining peripherals. Data scientists and many other potential users in the AI community use a “different world” of tools, Richard said.

“We want them to be closer to the hardware, and the way to do that is to adapt our tools to their way of working,” he added.

ST’s GitHub model zoo currently includes example models optimized for STM32 MCUs, covering human motion sensing, image classification, object detection and audio event detection. Developers can use these models as a starting point for their own applications.

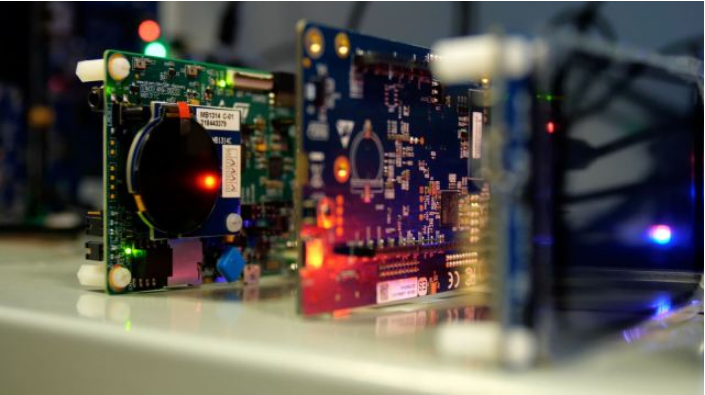

The new board farm allows users to remotely measure the performance of optimized models directly on different STM32 MCUs.

“No need to buy a bunch of STM32 boards to test AI, they can do it remotely thanks to code that is running physically on our ST board farms,” Richard said. “They can get the real latency and memory footprint measurements for inference on different boards.”

The board farm will start with 10 boards available for each STM32 part number, a figure that will increase in the coming months, according to Richard. These boards are located in several places, separate from ST infrastructure, to ensure a stable and secure service.

Optimized code

Tools in STM32Cube.AI’s toolbox include a graph optimizer, which converts TensorFlow Lite for Microcontrollers, PyTorch or ONNX files to optimized C code based on STM32 libraries. Graphs are rewritten to optimize for memory footprint or latency, or for a balance of the two that the user can control.
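To make the memory-versus-latency balance concrete, here is a minimal Python sketch of how a user-controlled setting could select among candidate versions of a graph. It is an illustration only and does not reflect how STM32Cube.AI is implemented: the `pick_variant` function, the `balance` parameter, and the variant figures are all hypothetical placeholders, not measurements.

```python
def pick_variant(variants, balance):
    """Pick the variant with the lowest weighted cost.

    balance = 0.0 favors the smallest RAM footprint,
    balance = 1.0 favors the lowest latency.
    (Hypothetical knob; not an STM32Cube.AI option.)
    """
    if not 0.0 <= balance <= 1.0:
        raise ValueError("balance must be between 0.0 and 1.0")

    # Normalize both metrics to the worst candidate so they are comparable.
    max_ram = max(v["ram_bytes"] for v in variants)
    max_latency = max(v["latency_ms"] for v in variants)

    def cost(v):
        ram_term = v["ram_bytes"] / max_ram
        latency_term = v["latency_ms"] / max_latency
        return (1.0 - balance) * ram_term + balance * latency_term

    return min(variants, key=cost)


# Placeholder candidates: made-up numbers, not results from any real board.
VARIANTS = [
    {"name": "small", "ram_bytes": 40_000, "latency_ms": 30.0},
    {"name": "mixed", "ram_bytes": 60_000, "latency_ms": 20.0},
    {"name": "fast", "ram_bytes": 100_000, "latency_ms": 15.0},
]

if __name__ == "__main__":
    for balance in (0.0, 0.5, 1.0):
        print(balance, pick_variant(VARIANTS, balance)["name"])
```

With these placeholder figures, the two extremes select the smallest and the fastest variant respectively, while a mid-range setting selects the compromise candidate.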

There is also a memory optimizer that shows graphically how much memory (Flash and RAM) each layer is using. Individual layers that are too large for memory may be split into two steps, for example.
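The per-layer accounting described above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not ST's algorithm: the article does not say how the tool decides to split a layer, so the sketch assumes each layer has a temporary working buffer (`scratch_bytes`) that shrinks when the layer runs in two steps. The `Layer` class, `fit_to_ram`, and all byte counts are hypothetical.

```python
from dataclasses import dataclass, replace
from math import ceil


@dataclass(frozen=True)
class Layer:
    name: str
    flash_bytes: int    # weights, stored in Flash
    io_bytes: int       # input and output activations, held in RAM
    scratch_bytes: int  # temporary working buffer, held in RAM
    steps: int = 1      # number of steps the layer is executed in

    @property
    def ram_bytes(self):
        # Assumption: only the scratch buffer shrinks when a layer is split.
        return self.io_bytes + ceil(self.scratch_bytes / self.steps)


def fit_to_ram(layers, ram_budget):
    """Run any layer that exceeds the RAM budget in two steps instead of one."""
    fitted = []
    for layer in layers:
        if layer.ram_bytes > ram_budget:
            layer = replace(layer, steps=2)
        fitted.append(layer)
    return fitted


# Placeholder model: made-up sizes, not taken from a real network.
MODEL = [
    Layer("conv1", flash_bytes=2_000, io_bytes=40_000, scratch_bytes=30_000),
    Layer("conv2", flash_bytes=18_000, io_bytes=24_000, scratch_bytes=8_000),
    Layer("dense", flash_bytes=5_000, io_bytes=1_000, scratch_bytes=0),
]

if __name__ == "__main__":
    for layer in fit_to_ram(MODEL, ram_budget=64_000):
        print(layer.name, layer.flash_bytes, layer.ram_bytes, layer.steps)
```

In this example only `conv1` exceeds the 64,000-byte budget, so it is the only layer run in two steps. A split layer can still be over budget if its activations alone are too large, which this sketch does not attempt to handle.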

Previous MLPerf Tiny results showed performance advantages for ST’s inference engine, an optimized version of Arm’s CMSIS-NN, versus standard CMSIS-NN scores.

The STM32Cube.AI developer cloud will also support the STM32N6, ST’s forthcoming microcontroller with an in-house developed NPU, when it becomes available.
